Roadie now keeps your catalog in sync with GitHub using the webhooks API!

By Miklos Kiss • October 4th, 2022

As a Roadie user, when you edit a Backstage YAML file in your GitHub repo, the changes now appear in your Catalog almost immediately. Our team designed and implemented a webhook-based GitHub integration to replace the default polling-based discovery that ships with Backstage.

Previously, we relied on Backstage’s default behavior for keeping the catalog up to date. This was a pull-based approach in which Roadie polled your GitHub and kept the catalog in sync.

By default, the polling interval was set to 2 minutes. This is a long time to wait while you are in the middle of editing your scaffolder templates and still figuring things out.

Polling large catalogs also sent many requests to the GitHub APIs, which could lead to rate limiting and a degraded user experience.

With this release, we use the GitHub webhooks API to get notified when you change your Backstage YAML files.

We also added a new feature to the GitHub integrations settings page that lets you manually trigger a sync with your GitHub repos. This is useful if you added catalog-info.yaml files to a repository where the Roadie GitHub app was not installed.

The benefits for Roadie users

We believe this new webhooks-based approach brings a number of benefits:

- We no longer use Location entities for discovery. This spares the repeated fetches of all of the organization's repositories for every configured github-discovery Location entity.

- The catalog reacts almost immediately when you push something to your configured branches.

- You can now safely rename your catalog files in your GitHub repo. (A rename shows up as a deletion of the old filename and an addition of the new one.)

- API entities are refreshed when a referenced definition file (e.g. an OpenAPI or gRPC file) changes, as long as that file is hosted in GitHub.

Read on for the juicy technical details of the implementation.

Tech Stuff

Let me walk you through our journey of implementing and rolling out instant updates for Roadie users!

The Past

Before webhooks, we relied on Backstage's default implementation of auto-discovery. It used the processing loop and the provided processors to ingest entities from GitHub organizations. Specifically, we used the GithubDiscoveryProcessor from OSS Backstage.

It works like this:

- This processor is configured and added to your catalog builder.

- It is evaluated for every entity being processed, to decide whether it should run.

- It only executes its logic when the entity being processed is a Location entity of type github-discovery.

- It fetches all of the repositories in your organization, then creates an optional Location entity for every repo.

- These Location entities are then processed in turn: they fetch the files and emit the entities found at the target paths.
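The fan-out step in the list above can be sketched as follows. This is a simplified illustration, not the GithubDiscoveryProcessor source. The `Repository` and `LocationSpec` shapes and the default `/blob/-/catalog-info.yaml` path are assumptions made for the example. The key point is that every run maps over *all* repositories, whether or not anything changed.

```typescript
// Hypothetical shapes, loosely modelled on a Backstage location spec.
interface Repository {
  name: string;
  url: string; // e.g. https://github.com/my-org/my-repo
}

interface LocationSpec {
  type: "url";
  target: string;
  presence: "optional" | "required";
}

// Simplified poll-based discovery: given the full repository list (fetched
// again on every run), emit one optional location per repository pointing
// at the catalog file path.
function discoverLocations(
  repositories: Repository[],
  catalogPath = "/blob/-/catalog-info.yaml",
): LocationSpec[] {
  return repositories.map((repo): LocationSpec => ({
    type: "url",
    target: `${repo.url}${catalogPath}`,
    // Optional: a repository without the file is not treated as an error.
    presence: "optional",
  }));
}

const locations = discoverLocations([
  { name: "service-a", url: "https://github.com/my-org/service-a" },
  { name: "service-b", url: "https://github.com/my-org/service-b" },
]);
console.log(locations.map((l) => l.target));
```

Each emitted location is then read by the processing loop on its own schedule, which is where the per-file fetches come from.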

This processor has two main drawbacks:

- It is tied to the processing loop, so you cannot give it its own interval. This is a problem if GitHub is rate limiting you, because there is no option to lengthen the loop duration.

- It makes unnecessary requests to the GitHub API by fetching all of the repositories every time it runs.
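A back-of-the-envelope estimate shows how the listing cost of polling grows. The model below is purely illustrative and rests on these assumptions:

- one request per page of 100 repositories, the GitHub REST API's maximum page size;
- every github-discovery Location re-lists the whole organization on each run;
- the 2-minute interval mentioned earlier.

It ignores the follow-up file fetches, so real traffic would be higher.

```typescript
// Illustrative estimate of repository-listing requests per hour under
// poll-based discovery (assumptions: 100 repos per page, every discovery
// location re-lists the organization on every run, file fetches ignored).
function listingRequestsPerHour(
  repoCount: number,
  discoveryLocations: number,
  intervalMinutes: number,
): number {
  const pagesPerRun = Math.ceil(repoCount / 100);
  const runsPerHour = 60 / intervalMinutes;
  return pagesPerRun * discoveryLocations * runsPerHour;
}

console.log(listingRequestsPerHour(2500, 1, 2)); // 750
console.log(listingRequestsPerHour(2500, 3, 2)); // 2250
```

The cost scales with repository count times the number of discovery locations, even when nothing changed. A push-driven approach only pays per change.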

The Present

We built a Roadie-specific entity provider that acts on incoming GitHub webhooks.

It uses your configured Roadie Backstage GitHub app to forward the GitHub push events from your organization’s repositories to our servers.

For every push, the GitHub webhooks API sends Roadie arrays of the added, modified, and deleted files (the payload calls the last one "removed"), which describe what happened in the event. The provider handles modifications differently from additions and deletions.
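In the GitHub push payload these lists are reported per commit: each entry in `commits` has `added`, `removed`, and `modified` arrays of file paths. The sketch below folds them into the net effect of the whole push. It is an illustrative helper, not Roadie's provider code; `collectFileChanges` and the trimmed-down payload types are hypothetical. A rename naturally ends up as one removed path plus one added path.

```typescript
// Minimal subset of the GitHub push event payload used here.
interface PushCommit {
  added: string[];
  removed: string[];
  modified: string[];
}

interface PushEvent {
  ref: string; // e.g. "refs/heads/main"
  commits: PushCommit[];
}

interface FileChanges {
  added: Set<string>;
  removed: Set<string>;
  modified: Set<string>;
}

// Hypothetical helper: replays commits in order and keeps only the net
// effect, so a file added and then edited in the same push counts as added.
function collectFileChanges(event: PushEvent): FileChanges {
  const added = new Set<string>();
  const removed = new Set<string>();
  const modified = new Set<string>();

  for (const commit of event.commits) {
    for (const file of commit.added) {
      if (removed.has(file)) {
        // Deleted and re-created within one push: net effect is a change.
        removed.delete(file);
        modified.add(file);
      } else {
        added.add(file);
      }
    }
    for (const file of commit.modified) {
      // Edits to a file that is new in this push are part of the addition.
      if (!added.has(file)) modified.add(file);
    }
    for (const file of commit.removed) {
      if (added.has(file)) {
        // Created and deleted within one push: nothing to do.
        added.delete(file);
      } else {
        modified.delete(file);
        removed.add(file);
      }
    }
  }
  return { added, removed, modified };
}

// A push that renames the catalog file and edits an OpenAPI descriptor.
const changes = collectFileChanges({
  ref: "refs/heads/main",
  commits: [
    { added: ["catalog.yaml"], removed: ["catalog-info.yaml"], modified: [] },
    { added: [], removed: [], modified: ["openapi.yaml"] },
  ],
});
console.log(Array.from(changes.added), Array.from(changes.removed), Array.from(changes.modified));
```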

When a modification event happens:

- Get the event from GitHub

- Collect all of the modified filenames in the push event

- Trigger a refresh in the Backstage database

We request a refresh for every modified filename and let the database decide whether there is a matching entity to schedule a refresh for. We implemented it this way because it enables instant refreshes of API entities: when the value of a $text placeholder is a file managed in GitHub and you change that OpenAPI descriptor, we refresh the API entity it belongs to.
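Here is a minimal sketch of this "refresh every filename and let the catalog decide" step, under stated assumptions:

- `RefreshConnection` is a hypothetical stand-in shaped after the `refresh({ keys })` method on Backstage's entity provider connection. Check it against your Backstage version.
- The `url:<repo>/blob/<branch>/<path>` key format is an assumption. Real keys must match whatever the catalog recorded when it read the file.

```typescript
// Hypothetical stand-in for the refresh capability of an entity provider
// connection (shaped after Backstage's refresh({ keys }); verify locally).
interface RefreshConnection {
  refresh(options: { keys: string[] }): Promise<void>;
}

// Builds one refresh key per modified file. The "url:" prefix and the
// /blob/<branch>/ layout are assumptions for illustration.
function refreshKeysFor(repoUrl: string, branch: string, files: string[]): string[] {
  return files.map((file) => `url:${repoUrl}/blob/${branch}/${file}`);
}

// No lookup happens here: keys that no entity registered are simply
// ignored, while an API entity whose $text placeholder read this file
// gets scheduled for a refresh.
async function onModifiedFiles(
  connection: RefreshConnection,
  repoUrl: string,
  branch: string,
  files: string[],
): Promise<void> {
  await connection.refresh({ keys: refreshKeysFor(repoUrl, branch, files) });
}

// Usage with a fake connection that just records the requested keys.
const requested: string[] = [];
const fakeConnection: RefreshConnection = {
  async refresh({ keys }) {
    requested.push(...keys);
  },
};
onModifiedFiles(fakeConnection, "https://github.com/my-org/payments", "main", [
  "catalog-info.yaml",
  "openapi.yaml",
]).then(() => console.log(requested));
```

The design choice is to push the matching problem into the database. The provider does not need to know which entities reference which files.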

When the event contains additions/deletions:

- Get the event from GitHub

- Construct a set of filenames for added files

- Construct a set of filenames for deleted files

- Filter these based on the configuration

- Create an optional Location entity for these files with proper location annotations

This path is similar to the previous discovery. For every added or deleted file that matches your configuration, we create a Location entity whose spec.target points to the file we received in the GitHub event, and we rely on the processing loop to fetch the file and emit its actual content.
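The addition/deletion path can be sketched as a function that turns the filtered file lists into Location entities to add and remove. Several pieces are assumptions for illustration:

- the `LocationDelta` shape;
- the `isCatalogFile` predicate, which stands in for your configured targets;
- the FNV-1a-based `generated-` naming;
- the annotation values.

The annotation keys `backstage.io/managed-by-location` and `backstage.io/managed-by-origin-location` are standard Backstage annotations. Because the name is derived deterministically from the target URL, a deletion produces the same entity that the earlier addition created.

```typescript
interface LocationEntity {
  apiVersion: "backstage.io/v1alpha1";
  kind: "Location";
  metadata: { name: string; annotations: Record<string, string> };
  spec: { type: "url"; target: string; presence: "optional" };
}

// Hypothetical delta: Location entities to add and to remove for one push.
interface LocationDelta {
  added: LocationEntity[];
  removed: LocationEntity[];
}

// 32-bit FNV-1a over UTF-16 code units, used only to derive a short,
// stable entity name from the target URL (hypothetical naming scheme).
function fnv1a(input: string): string {
  let hash = 0x811c9dc5;
  for (let i = 0; i < input.length; i++) {
    hash ^= input.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash.toString(16);
}

function toLocationEntity(target: string): LocationEntity {
  return {
    apiVersion: "backstage.io/v1alpha1",
    kind: "Location",
    metadata: {
      name: `generated-${fnv1a(target)}`,
      annotations: {
        "backstage.io/managed-by-location": `url:${target}`,
        "backstage.io/managed-by-origin-location": `url:${target}`,
      },
    },
    // Optional, so a target that cannot be read is not treated as an error.
    spec: { type: "url", target, presence: "optional" },
  };
}

// Only files accepted by isCatalogFile become Location entities; the
// processing loop later fetches each target and emits its entities.
function buildLocationDelta(
  repoUrl: string,
  branch: string,
  added: string[],
  removed: string[],
  isCatalogFile: (path: string) => boolean,
): LocationDelta {
  const toEntities = (files: string[]) =>
    files.filter(isCatalogFile).map((file) => toLocationEntity(`${repoUrl}/blob/${branch}/${file}`));
  return { added: toEntities(added), removed: toEntities(removed) };
}

const delta = buildLocationDelta(
  "https://github.com/my-org/payments",
  "main",
  ["catalog.yaml", "README.md"],
  ["catalog-info.yaml"],
  (path) => path.endsWith(".yaml"),
);
console.log(delta.added.map((e) => e.spec.target), delta.removed.map((e) => e.spec.target));
```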

We removed the polling for entities, and we no longer allow github-discovery Location entities to be added to the catalog.

Some things to iron out

With the current implementation, some edge cases can be confusing or not work as expected.

Multiple entities in one file (catalog-info.yaml)

```yaml
apiVersion: backstage.io/v1alpha1
kind: Component
metadata:
  name: valid-same-file-entity-1
spec:
  type: library
  owner: user:kissmikijr
  lifecycle: production
---
apiVersion: backstage.io/v1alpha1
kind: Component
metadata:
  name: valid-same-file-entity-2
spec:
  type: library
  owner: user:kissmikijr
  lifecycle: production
```

This approach can cause undesired behaviour if one of your entities ends up with a validation error.

If this happens, the catalog creates a Location entity that points to this file, but processing of the entities does not finish. As a result, Backstage does not store the information it needs to trigger refreshes. Even if you fix the validation errors in your next commit, you'll have to wait for the regular processing loop to handle the refresh.

Registering an Entity via the /register-existing-component page

Because an entity registered this way was not added to the catalog via webhooks, the webhook cannot remove it when you delete the file from your GitHub repo.

Updates to this entity, however, are still instant.

Using the Location kind

You may have previously used a Location entity in your repository to register it and let the processing loop find the other targets, like this:

```yaml
apiVersion: backstage.io/v1alpha1
kind: Location
metadata:
  name: roadie-backstage-plugins
spec:
  targets:
    - ./plugins/**/catalog-info.yaml
    - ./utils/**/catalog-info.yaml
```

In that case, automatic refreshes will not work for the target entities.

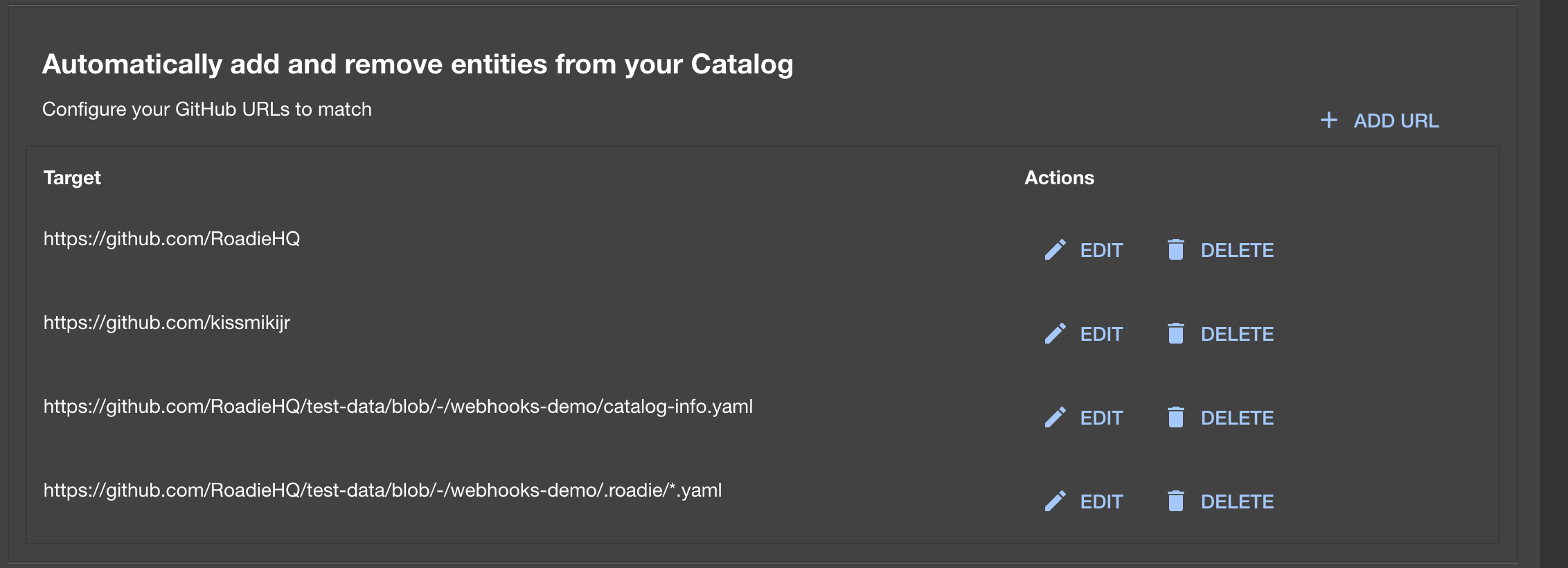

This is a shortcoming of the open source implementation of refresh handling, and a fix is planned. Until then, our advice is to drop the top-level Locations and configure the targets on the /administration/settings/integrations/github configuration page.

In this case, you’d add two entries to the Targets:

```
# Entry 1
https://github.com/RoadieHQ/roadie-backstage-plugins/blob/-/plugins/**/catalog-info.yaml

# Entry 2
https://github.com/RoadieHQ/roadie-backstage-plugins/blob/-/utils/**/catalog-info.yaml
```

To configure your targets, check out the documentation.
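To illustrate how such a target could filter the paths in a push event, here is a small glob matcher. It is a sketch, not Roadie's matching code, and it rests on these assumptions:

- `**/` matches zero or more whole directories, `*` matches within one path segment, and `?` matches one character.
- `-` in the branch position means "the repository's default branch". GitHub's push payload does include the repository's default branch.
- `main` is assumed to be that default branch in the example.

`parseTarget`, `globToRegExp`, and `targetMatches` are hypothetical helpers.

```typescript
// Converts a path glob into an anchored regular expression.
function globToRegExp(glob: string): RegExp {
  let pattern = "";
  for (let i = 0; i < glob.length; i++) {
    const ch = glob[i];
    if (ch === "*" && glob[i + 1] === "*") {
      if (glob[i + 2] === "/") {
        pattern += "(?:[^/]+/)*"; // "**/" = zero or more directories
        i += 2;
      } else {
        pattern += ".*"; // trailing "**" = anything
        i += 1;
      }
    } else if (ch === "*") {
      pattern += "[^/]*"; // "*" stays within one path segment
    } else if (ch === "?") {
      pattern += "[^/]";
    } else {
      pattern += ch.replace(/[.+^${}()|[\]\\]/g, "\\$&"); // escape regex syntax
    }
  }
  return new RegExp(`^${pattern}$`);
}

interface ParsedTarget {
  repoUrl: string;
  branch: string; // "-" is assumed to mean the default branch
  pathGlob: RegExp;
}

function parseTarget(target: string): ParsedTarget {
  const match = /^(https:\/\/github\.com\/[^/]+\/[^/]+)\/blob\/([^/]+)\/(.+)$/.exec(target);
  if (!match) throw new Error(`Unsupported target: ${target}`);
  return { repoUrl: match[1], branch: match[2], pathGlob: globToRegExp(match[3]) };
}

function targetMatches(
  target: ParsedTarget,
  repoUrl: string,
  pushedBranch: string,
  defaultBranch: string,
  path: string,
): boolean {
  const wantedBranch = target.branch === "-" ? defaultBranch : target.branch;
  return target.repoUrl === repoUrl && wantedBranch === pushedBranch && target.pathGlob.test(path);
}

const targets = [
  "https://github.com/RoadieHQ/roadie-backstage-plugins/blob/-/plugins/**/catalog-info.yaml",
  "https://github.com/RoadieHQ/roadie-backstage-plugins/blob/-/utils/**/catalog-info.yaml",
].map(parseTarget);

const repo = "https://github.com/RoadieHQ/roadie-backstage-plugins";
const isTracked = (path: string) =>
  targets.some((t) => targetMatches(t, repo, "main", "main", path));
console.log(isTracked("plugins/backend/catalog-info.yaml")); // true
console.log(isTracked("docs/catalog-info.yaml")); // false
```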